Optimizing Data Center Performance with RoCE Switches

In the realm of data center optimization, the performance of networking equipment plays a pivotal role in achieving seamless operations and high efficiency. One technology gaining traction for its ability to significantly improve data center performance is RoCE, together with the switches that support it. In this blog post, we will explain how RoCE works, discuss its advantages, and cover key deployment considerations.

The Importance of Data Center Performance

Data center performance is crucial for ensuring seamless operation, high availability, and optimal user experience. In today's fast-paced digital landscape, where data is generated and processed at unprecedented speeds, the need for efficient networking solutions cannot be overstated. The performance of data center networking equipment, including switches, directly impacts the reliability and scalability of data center operations.

Understanding RoCE Technology

RoCE, or RDMA over Converged Ethernet, is a cutting-edge technology that uses the Remote Direct Memory Access (RDMA) protocol to enable high-speed, low-latency data transfers over Ethernet networks.

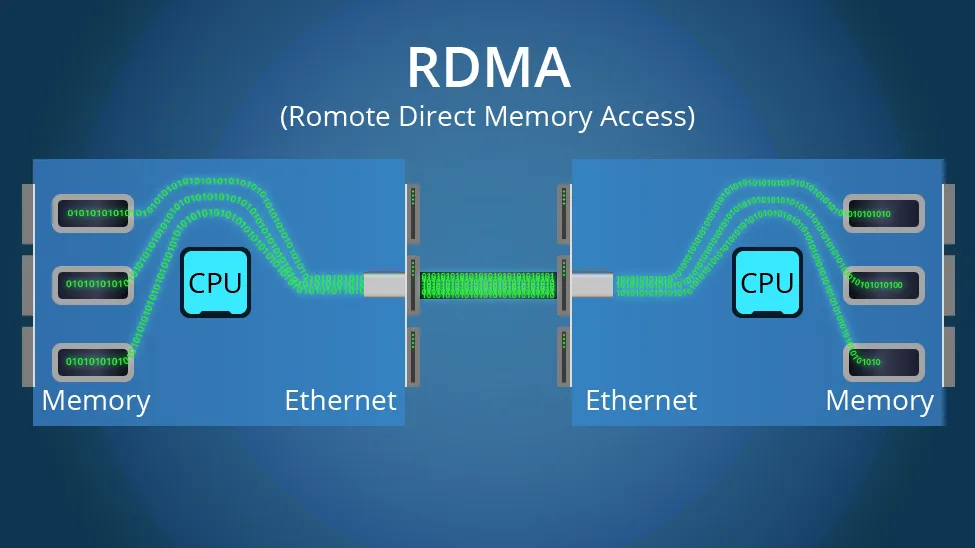

RDMA

Remote Direct Memory Access (RDMA) is a technology that lets a network adapter move data directly between the memory of two hosts, such as servers and storage systems, without involving the remote CPU or either host's operating system kernel in the transfer. By bypassing the kernel's TCP/IP networking stack, RDMA minimizes latency and CPU overhead, resulting in faster data transfers and improved system performance.

RoCE

RoCE extends the benefits of RDMA to Ethernet networks, allowing organizations to leverage their existing Ethernet infrastructure while enjoying the performance advantages of RDMA. RoCE relies on RDMA-capable network adapters (RNICs) in the hosts and on switches configured to handle RDMA traffic to enable low-latency, high-throughput data communication within data center environments.

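There are two main versions of RoCE, and they differ mainly in how far their traffic can travel. RoCE v1 is an Ethernet link-layer protocol (EtherType 0x8915), so its traffic is confined to a single Layer 2 broadcast domain. RoCE v2 encapsulates RDMA traffic in UDP/IP (UDP destination port 4791), which makes it routable across Layer 3 networks and enables ECN-based congestion control. Both versions typically rely on DCB (Data Center Bridging) features such as PFC to provide a lossless Ethernet fabric.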
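To make the difference between the two versions concrete, here is a minimal Python sketch that classifies a raw Ethernet frame. It relies on two protocol constants: RoCE v1 frames carry EtherType 0x8915, and RoCE v2 packets use UDP destination port 4791. The function `classify_frame` and the test helper `build_roce_v2_ipv4_frame` are hypothetical names, and the sketch assumes untagged frames and IPv6 packets without extension headers.

```python
import struct

ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_IPV6 = 0x86DD
ETHERTYPE_ROCE_V1 = 0x8915   # RoCE v1: RDMA headers follow the Ethernet header directly
ROCE_V2_UDP_PORT = 4791      # UDP destination port used by RoCE v2
IPPROTO_UDP = 17
ETH_HDR_LEN = 14             # untagged Ethernet header (no 802.1Q VLAN tag assumed)


def classify_frame(frame: bytes) -> str:
    """Return 'RoCE v1', 'RoCE v2', or 'other' for an untagged Ethernet frame."""
    if len(frame) < ETH_HDR_LEN:
        return "other"
    (ethertype,) = struct.unpack_from("!H", frame, 12)
    if ethertype == ETHERTYPE_ROCE_V1:
        return "RoCE v1"
    if ethertype == ETHERTYPE_IPV4 and len(frame) >= ETH_HDR_LEN + 20:
        ihl = (frame[ETH_HDR_LEN] & 0x0F) * 4   # IPv4 header length in bytes
        proto = frame[ETH_HDR_LEN + 9]          # IPv4 Protocol field
        udp_off = ETH_HDR_LEN + ihl
    elif ethertype == ETHERTYPE_IPV6 and len(frame) >= ETH_HDR_LEN + 40:
        proto = frame[ETH_HDR_LEN + 6]          # IPv6 Next Header (no extension headers assumed)
        udp_off = ETH_HDR_LEN + 40
    else:
        return "other"
    if proto != IPPROTO_UDP or len(frame) < udp_off + 8:
        return "other"
    (dst_port,) = struct.unpack_from("!H", frame, udp_off + 2)
    return "RoCE v2" if dst_port == ROCE_V2_UDP_PORT else "other"


def build_roce_v2_ipv4_frame() -> bytes:
    """Build a simplified RoCE v2 frame for testing (checksums, ICRC and FCS omitted)."""
    eth = b"\x00" * 12 + struct.pack("!H", ETHERTYPE_IPV4)
    # Minimal IPv4 header: version 4, IHL 5 (20 bytes), TTL 64, protocol UDP
    ip = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20 + 8 + 12, 0, 0, 64, IPPROTO_UDP, 0,
                     bytes([10, 0, 0, 1]), bytes([10, 0, 0, 2]))
    udp = struct.pack("!HHHH", 49152, ROCE_V2_UDP_PORT, 8 + 12, 0)
    bth = b"\x00" * 12   # placeholder for the 12-byte InfiniBand Base Transport Header
    return eth + ip + udp + bth


if __name__ == "__main__":
    print(classify_frame(build_roce_v2_ipv4_frame()))
```

A production parser would also need to handle 802.1Q VLAN tags, which some PFC deployments use to carry the traffic priority.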

Benefits of RoCE Switches in Data Centers

RoCE switches play a pivotal role in optimizing data center performance by leveraging RDMA technology to deliver low latency, high throughput, CPU offload, and scalability benefits.

Low Latency

With RoCE, network adapters read and write remote memory directly, and RoCE switches carry this traffic between servers and storage devices with minimal delay, enabling near-real-time data access and processing. This low-latency communication is critical for applications requiring rapid data transfer, such as high-frequency trading or real-time analytics.

High Throughput

RoCE switches support high-speed data transfer rates, enabling organizations to achieve high throughput for data-intensive workloads. Because RDMA avoids the per-packet processing and data copies of a kernel networking stack, hosts can keep high-speed links busy, and RoCE switches help remove network bottlenecks, resulting in enhanced data center performance and efficiency.
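As a rough illustration of how much of a link RoCE traffic can use, the following sketch estimates per-packet wire efficiency for RoCE v2. It assumes untagged Ethernet, IPv4, and packets that carry only the 12-byte Base Transport Header (no extended transport headers such as RETH), so the figures are approximations rather than measured results. The function names are hypothetical.

```python
# Per-packet overhead in bytes for a RoCE v2 packet, assuming untagged Ethernet,
# IPv4, and only the Base Transport Header (e.g., middle packets of a message).
WIRE_OVERHEAD = {
    "preamble_sfd_ifg": 20,  # 7-byte preamble + 1-byte SFD + 12-byte inter-frame gap
    "ethernet_header": 14,
    "ipv4_header": 20,
    "udp_header": 8,
    "ib_bth": 12,            # InfiniBand Base Transport Header
    "icrc": 4,               # invariant CRC carried by RoCE packets
    "ethernet_fcs": 4,
}


def roce_v2_efficiency(payload_bytes: int) -> float:
    """Fraction of wire time spent carrying RDMA payload."""
    overhead = sum(WIRE_OVERHEAD.values())
    return payload_bytes / (payload_bytes + overhead)


def goodput_gbps(link_gbps: float, payload_bytes: int) -> float:
    """Approximate payload rate on a fully loaded link of the given speed."""
    return link_gbps * roce_v2_efficiency(payload_bytes)


if __name__ == "__main__":
    for payload in (1024, 4096):   # common RoCE path MTU values
        print(f"payload {payload} B: {roce_v2_efficiency(payload):.2%} efficient, "
              f"~{goodput_gbps(400, payload):.1f} Gb/s on a 400G link")
```

Under these assumptions a 4096-byte payload is about 98% efficient, which suggests why larger path MTUs are generally preferred. VLAN tags, IPv6, or extended transport headers add overhead and lower the figure slightly.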

CPU Offload

Traditional networking protocols rely heavily on CPU processing for data transmission and management. In contrast, RoCE offloads transport processing to RDMA-capable network adapters, freeing up CPU resources for other critical computing tasks. This CPU offload capability enhances overall system performance and scalability, particularly in virtualized or cloud environments.

Scalability

RoCE switches help organizations expand their data center infrastructure without sacrificing performance or efficiency. Because RoCE v2 traffic is routable over IP, it can extend across multi-tier Layer 3 fabrics rather than a single Layer 2 domain, allowing businesses to adapt to evolving workload demands and infrastructure requirements.

How to Implement RDMA in Data Centers

To enable RDMA in a data center, each server or host needs a RoCE-capable network adapter (an RDMA NIC, or RNIC) and its driver; a standard Ethernet NIC without RDMA support does not provide RoCE offload. On the network side, choose switches whose network operating system supports PFC (Priority Flow Control) so that RoCE traffic can be carried without packet loss.
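After installing an RNIC and its driver on a Linux host, one way to confirm that the adapter is visible is to check sysfs: the kernel's RDMA subsystem lists devices under `/sys/class/infiniband`, and each port's `link_layer` file reads `Ethernet` for RoCE ports. The sketch below walks that directory. The function name `list_rdma_ports` is hypothetical, and device names such as `mlx5_0` vary by vendor. The `ibv_devinfo` utility from rdma-core reports the same information.

```python
from pathlib import Path


def list_rdma_ports(sysfs_root: str = "/sys/class/infiniband") -> list[tuple[str, str, str]]:
    """Return (device, port, link_layer) for every RDMA port found under sysfs_root."""
    root = Path(sysfs_root)
    results: list[tuple[str, str, str]] = []
    if not root.is_dir():
        return results  # no RDMA driver loaded, or not a Linux host
    for dev in sorted(root.iterdir()):
        ports_dir = dev / "ports"
        if not ports_dir.is_dir():
            continue
        for port in sorted(ports_dir.iterdir()):
            link_file = port / "link_layer"
            if link_file.is_file():
                # "Ethernet" indicates a RoCE port; "InfiniBand" a native IB port
                results.append((dev.name, port.name, link_file.read_text().strip()))
    return results


if __name__ == "__main__":
    ports = list_rdma_ports()
    if not ports:
        print("No RDMA devices found: check that the RNIC driver is installed.")
    for dev, port, link in ports:
        kind = "RoCE" if link == "Ethernet" else link
        print(f"{dev} port {port}: link_layer={link} ({kind})")
```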

Considerations for Deploying RoCE Switches

- Infrastructure requirements: Ensure your network infrastructure can support the high-speed, low-latency communication RoCE requires, including RDMA-capable adapters in the hosts and switches that support PFC.
- Configuration best practices: Tune RoCE switch configurations to maximize performance and minimize latency by adjusting parameters such as buffer sizes and traffic prioritization.
- Interoperability: Ensure compatibility with existing network infrastructure and hardware to avoid integration challenges and performance issues.
- Security considerations: Implement measures such as network segmentation and encryption to protect sensitive data and mitigate security risks associated with RoCE deployments.
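Among the buffer-related switch parameters worth tuning are the queue thresholds at which ECN marking starts. DCQCN, a congestion control scheme widely used with RoCE v2, has switches mark packets along a RED-style curve: no marking below a minimum queue depth, a linearly rising probability up to a maximum, and marking of every packet beyond the upper threshold. The sketch below models that curve. The function name and the example thresholds (100 KB, 400 KB, 20%) are hypothetical and not vendor defaults, and actual configuration syntax differs by network operating system.

```python
def ecn_mark_probability(queue_kb: float, k_min_kb: float,
                         k_max_kb: float, p_max: float) -> float:
    """Probability that a switch ECN-marks a packet, RED-style as in DCQCN.

    queue_kb: current egress queue depth for the RoCE traffic class.
    k_min_kb / k_max_kb: hypothetical marking thresholds.
    p_max: marking probability reached at k_max_kb.
    """
    if not 0 <= k_min_kb < k_max_kb:
        raise ValueError("require 0 <= k_min_kb < k_max_kb")
    if not 0.0 <= p_max <= 1.0:
        raise ValueError("p_max must be between 0 and 1")
    if queue_kb <= k_min_kb:
        return 0.0          # short queue: no congestion signal
    if queue_kb > k_max_kb:
        return 1.0          # long queue: mark every packet
    # Between the thresholds, probability rises linearly from 0 to p_max.
    return p_max * (queue_kb - k_min_kb) / (k_max_kb - k_min_kb)


if __name__ == "__main__":
    # Hypothetical thresholds chosen only for illustration.
    for depth in (50, 250, 400, 500):
        p = ecn_mark_probability(depth, k_min_kb=100, k_max_kb=400, p_max=0.2)
        print(f"queue {depth} KB -> mark probability {p:.2f}")
```

With these values a 250 KB queue gives a marking probability of 0.1. Lower thresholds signal congestion earlier at some cost in throughput, and the ECN thresholds are normally set below the point where PFC pauses traffic, so that PFC acts as a backstop rather than the first response.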

Optimize Data Center Performance with RoCE Switches

As RoCE technology continues to evolve, several emerging trends and developments are shaping its impact on data center performance. These include advancements in hardware support, software optimizations, and standardization efforts aimed at further enhancing RoCE's capabilities and scalability. FS offers a 400G RoCE switch that lets organizations take advantage of these RoCE innovations to optimize their data center performance effectively.
