Explainable AI (XAI)

What is explainable AI, or XAI?

Explainable AI is a set of processes and methods that lets users understand and trust the results produced by the machine learning (ML) algorithms in AI systems. Explanations are tailored to different stakeholders, such as users, operators, and developers. They address a wide range of concerns, from user acceptance to governance to system development. This ability to explain is fundamental to building trust and confidence in AI, which in turn is vital for widespread adoption and for realizing AI's benefits. Related and emerging initiatives include trustworthy AI and responsible AI.

How is explainable AI implemented?

Explainable AI implementations can be guided by four principles set out by the U.S. National Institute of Standards and Technology (NIST):

- Explanation: The AI system delivers accompanying evidence or reasoning for all of its outputs.
- Meaningful: Explanations are understandable and meaningful to the individual users they are intended for.
- Explanation Accuracy: The explanation correctly reflects the process by which the AI system generated its output.
- Knowledge Limits: The AI system operates only under the conditions it was designed for, or only when it reaches sufficient confidence in its output.
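To make the four principles more concrete, here is a minimal Python sketch built on several assumptions that do not come from NIST: a hypothetical anomaly detector using a logistic-regression score, with made-up feature names (`cpu_load`, `error_rate`, `latency_ms`), weights, operating ranges, and a 0.8 confidence threshold. Every output carries its per-feature evidence (Explanation), and the `reason` field stands in for a user-facing message (Meaningful). Because the score is linear, each contribution `w * x` is exactly how much that feature moved the score, so the explanation matches the computation (Explanation Accuracy). The system abstains when an input falls outside its designed range or its confidence is too low (Knowledge Limits).

```python
import math
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical model parameters, for illustration only.
WEIGHTS = {"cpu_load": 2.0, "error_rate": 3.0, "latency_ms": 0.01}
BIAS = -4.0
# Assumed operating envelope and confidence cutoff (the "knowledge limits").
VALID_RANGES = {"cpu_load": (0.0, 1.0), "error_rate": (0.0, 1.0), "latency_ms": (0.0, 10_000.0)}
CONFIDENCE_THRESHOLD = 0.8


@dataclass
class ExplainedOutput:
    label: Optional[str]             # None means the system abstained
    confidence: float
    contributions: Dict[str, float]  # evidence: each feature's share of the score
    reason: str                      # plain-language summary for the user


def predict_with_explanation(features: Dict[str, float]) -> ExplainedOutput:
    # Knowledge limits (1): refuse inputs outside the conditions the model was built for.
    for name, (low, high) in VALID_RANGES.items():
        if not low <= features[name] <= high:
            return ExplainedOutput(None, 0.0, {}, f"{name}={features[name]} is outside the designed range")

    # Explanation accuracy: for a linear score, w * x is exactly each feature's effect.
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    p_anomaly = 1.0 / (1.0 + math.exp(-score))
    confidence = max(p_anomaly, 1.0 - p_anomaly)

    # Knowledge limits (2): defer to a human instead of guessing.
    if confidence < CONFIDENCE_THRESHOLD:
        return ExplainedOutput(None, confidence, contributions, "confidence too low; deferring to an operator")

    label = "anomaly" if p_anomaly >= 0.5 else "normal"
    top = max(contributions, key=lambda name: abs(contributions[name]))
    return ExplainedOutput(label, confidence, contributions, f"{label}: largest contribution from {top}")


if __name__ == "__main__":
    print(predict_with_explanation({"cpu_load": 0.9, "error_rate": 0.9, "latency_ms": 300.0}))
    print(predict_with_explanation({"cpu_load": 0.5, "error_rate": 0.5, "latency_ms": 100.0}))
```

Returning no label instead of a forced one is one simple way to honor Knowledge Limits. A production system might route such cases to an operator queue.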

NIST notes that the complexity of an explanation can vary depending on who will consume it. To illustrate, it offers five non-exhaustive categories of explanation types:

1. User Benefit
2. Societal Acceptance
3. Regulatory and Compliance
4. System Development
5. Owner Benefit

Why is explainable AI important?

Explainable AI is essential for earning and maintaining trust in automated systems. Trust is a prerequisite for successfully adopting AI, particularly AI for IT operations (AIOps). Without that trust, AI goes unused, and the scale and complexity of modern systems outpace what manual operations and traditional automation can manage.

The scrutiny needed to establish trust also exposes "AI washing," the practice of marketing a product or service as AI-driven when AI plays little or no real role in it. This helps both practitioners and customers perform due diligence on AI offerings. The level of trust and confidence in AI directly affects how widely and how quickly it is adopted, and therefore how fast its benefits can be realized at scale.

When a system is trusted to provide answers or make decisions, especially decisions with real-world consequences, it must be able to explain how it reached a decision, how that decision affects outcomes, and why any resulting actions are necessary.

What problem(s) does explainable AI solve?

Many AI and ML models are opaque, producing outputs that cannot be readily explained. The ability to uncover and explain why a model took a particular path or produced a particular output is crucial for establishing trust, supporting development, and promoting the adoption of AI technologies.

Explaining the data, models, and processes involved gives operators and users valuable insight into these systems and better observability of them. With that visibility, they can optimize a system based on reasoning they can inspect and verify. Most importantly, explainability makes it possible to identify and communicate flaws, biases, and risks so that they can be mitigated or eliminated.
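One common, model-agnostic way to reveal what drives a model's outputs is permutation feature importance. This technique shuffles one input column and measures how much accuracy drops. The article does not name this technique. It is offered here as an illustrative sketch, and the model, feature names (`error_rate`, `hour_of_day`), and data are hypothetical. A feature the model relies on shows a drop, while a feature it ignores scores zero.

```python
import random
from typing import Callable, Dict, List

Row = Dict[str, float]


def accuracy(model: Callable[[Row], bool], rows: List[Row], labels: List[bool]) -> float:
    # Fraction of rows where the model's prediction matches the label.
    return sum(model(row) == label for row, label in zip(rows, labels)) / len(rows)


def permutation_importance(model: Callable[[Row], bool], rows: List[Row], labels: List[bool],
                           feature: str, repeats: int = 20, seed: int = 0) -> float:
    """Mean drop in accuracy when one feature's values are shuffled across rows."""
    rng = random.Random(seed)  # fixed seed keeps the result reproducible
    baseline = accuracy(model, rows, labels)
    total_drop = 0.0
    for _ in range(repeats):
        values = [row[feature] for row in rows]
        rng.shuffle(values)
        # Break the link between this one feature and the label; leave the others intact.
        shuffled = [{**row, feature: value} for row, value in zip(rows, values)]
        total_drop += baseline - accuracy(model, shuffled, labels)
    return total_drop / repeats


# Hypothetical incident classifier: it looks only at error_rate and ignores hour_of_day.
def model(row: Row) -> bool:
    return row["error_rate"] > 0.5


rows = [{"error_rate": e, "hour_of_day": h}
        for e, h in [(0.9, 3.0), (0.8, 14.0), (0.7, 9.0), (0.6, 22.0),
                     (0.4, 3.0), (0.3, 14.0), (0.2, 9.0), (0.1, 22.0)]]
labels = [True, True, True, True, False, False, False, False]

if __name__ == "__main__":
    for feature in ("error_rate", "hour_of_day"):
        print(feature, permutation_importance(model, rows, labels, feature))
```

Here `hour_of_day` scores exactly 0.0 because the model never reads it, while `error_rate` scores above zero. If an attribute that should be irrelevant scored high, that would be a cue to investigate the model for bias or other flaws.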

How does explainable AI create transparency and build trust?

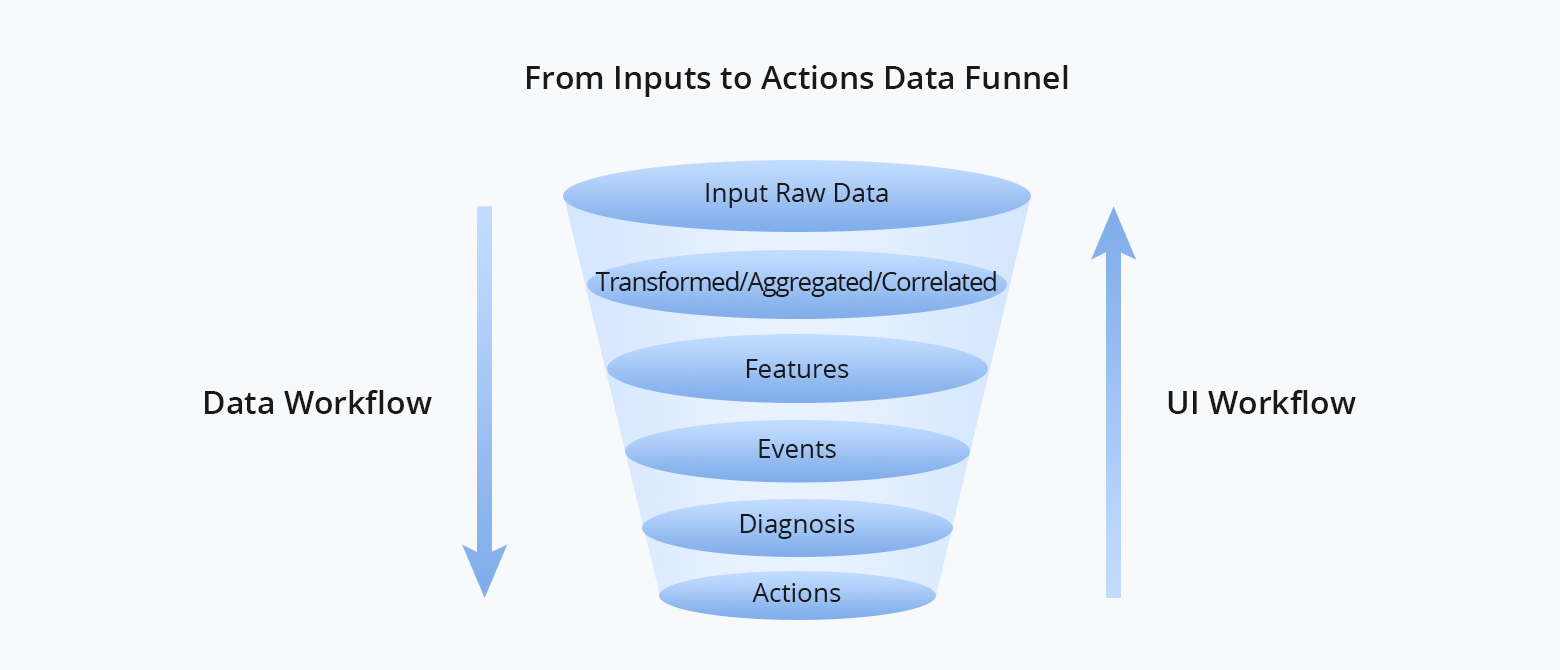

To be valuable, raw input data must ultimately lead to either a suggested action or an executed one. However, asking users to trust a fully autonomous workflow from the start is often too big a leap. Instead, users should be able to step through the supporting layers of evidence one at a time. The user interface (UI) workflow lets them move back through the events tier by tier, peeling back each layer all the way to the raw inputs. This approach promotes transparency and builds trust.
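The tier-by-tier drill-down can be modeled as a tree of evidence. A recommended action sits at the root, the supporting events sit below it, and the raw inputs are the leaves. The Python sketch below assumes that structure. The class, the functions, and the example data (switch name, log lines) are hypothetical and not taken from any specific product. `drill_down` returns what a user sees after expanding a given number of tiers, and `raw_inputs` follows every branch down to the data the recommendation ultimately rests on.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class EvidenceNode:
    tier: str  # e.g. "action", "event", "raw input"
    summary: str
    children: List["EvidenceNode"] = field(default_factory=list)


def drill_down(node: EvidenceNode, max_depth: int, depth: int = 0) -> List[str]:
    """Lines visible to a user who has expanded max_depth tiers below the root."""
    lines = ["  " * depth + f"[{node.tier}] {node.summary}"]
    if depth < max_depth:
        for child in node.children:
            lines.extend(drill_down(child, max_depth, depth + 1))
    return lines


def raw_inputs(node: EvidenceNode) -> List[str]:
    """Follow every branch to its leaves: the raw data behind the conclusion."""
    if not node.children:
        return [node.summary]
    return [leaf for child in node.children for leaf in raw_inputs(child)]


# Hypothetical example: an AIOps-style recommendation and its supporting evidence.
recommendation = EvidenceNode("action", "Roll back the 10:04 config change on switch sw-12", [
    EvidenceNode("event", "Client authentication failures spiked after the change", [
        EvidenceNode("raw input", "auth log: 412 failed logins between 10:05 and 10:15"),
        EvidenceNode("raw input", "config diff: VLAN 30 removed at 10:04"),
    ]),
    EvidenceNode("event", "Link and hardware health are normal", [
        EvidenceNode("raw input", "interface counters: 0 CRC errors on sw-12"),
    ]),
])

if __name__ == "__main__":
    # Expand one tier: the action plus its supporting events.
    print("\n".join(drill_down(recommendation, max_depth=1)))
    print(raw_inputs(recommendation))
```

With `max_depth=0`, the user sees only the recommendation. Each increment reveals one more tier, so users decide for themselves how far to verify before trusting the workflow.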

A framework that lets domain experts dig as deep as their skepticism requires, while letting novices explore as far as their curiosity takes them, helps both groups build trust while increasing their productivity and knowledge. This engagement creates a virtuous cycle that further refines the AI/ML algorithms and continuously improves the system.
